Webinar

When GenAI Meets Risky APIs

Sept 26th, 2024

PDT 9am | EDT 12pm | BST 5pm

Watch the Webinar

As Generative AI adoption grows across the enterprise, so does the risk surface for data breaches and attacks. API security is a must-have if you want to deploy GenAI technology responsibly and effectively.

Large Language Models (LLMs) excel at processing and understanding unstructured data in order to generate coherent, context-specific text. Yet the real power of an LLM comes when it’s connected to enterprise data sources. These connections are typically enabled by APIs and configured as plugins to the LLM. Critically, if the underlying APIs are vulnerable, anyone with access to the LLM may be able to exploit them, with potentially disastrous consequences.

Join us for this interactive session as we demonstrate how GenAI can be used to exploit unsecured APIs to gain unauthorized access, inject malicious prompts, and manipulate data. You will also learn how to keep your APIs from being undermined by adopting a proactive API-security-as-code approach to defending them.

Why Attend?

- Understand how LLMs can increase the attack surface of your APIs

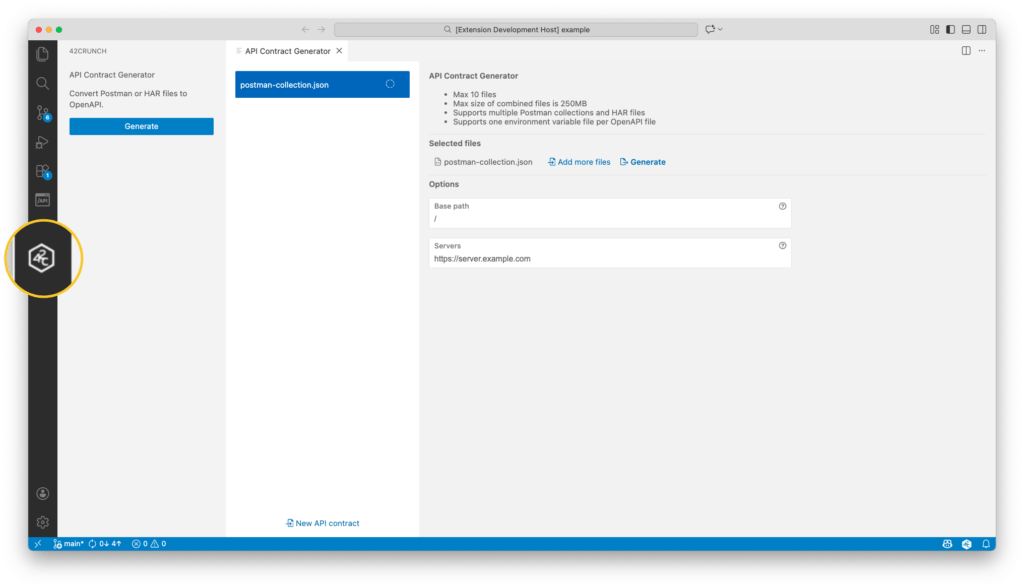

- Understand how continuous API security prevents data leakage through LLMs

- See why the API contract (Swagger definition), as the authoritative source the LLM uses when interacting with the API, should be verified to be secure, accurate, and complete
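
To give a flavor of what verifying an API contract can mean in practice, here is a minimal sketch that scans a Swagger/OpenAPI definition, loaded as a Python dictionary, and lists operations that declare no security requirement. The function name `unsecured_operations` and the example definition are hypothetical and only for illustration; this is not the tooling shown in the webinar, and a real audit would check far more than this one property.

```python
# Hypothetical sketch: flag operations in a Swagger/OpenAPI definition
# that have no effective security requirement.

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def unsecured_operations(spec: dict) -> list[str]:
    """Return 'METHOD /path' for each operation with no security requirement."""
    # A top-level 'security' list applies to every operation by default.
    global_security = spec.get("security", [])
    findings = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method.lower() not in HTTP_METHODS:
                continue  # skip non-operation keys such as 'parameters'
            # Operation-level 'security' overrides the global list;
            # an empty list removes the requirement entirely.
            effective = operation.get("security", global_security)
            if not effective:
                findings.append(f"{method.upper()} {path}")
    return sorted(findings)


# Illustrative definition (assumed, not taken from a real API).
example_spec = {
    "openapi": "3.0.3",
    "paths": {
        "/accounts/{id}": {
            "get": {"security": [{"bearerAuth": []}]},
            "delete": {},  # no security declared anywhere
        },
        "/health": {"get": {"security": []}},  # explicitly unauthenticated
    },
}

print(unsecured_operations(example_spec))
# ['DELETE /accounts/{id}', 'GET /health']
```

An unauthenticated health check may be intentional, while an unauthenticated `DELETE` is the kind of gap an LLM plugin could expose to anyone who can reach the model. The sketch only checks that a requirement is declared; it does not validate that the referenced scheme exists or is strong enough.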

Speaker

VP Business Development

42Crunch

Solution Engineer

42Crunch