How to secure your APIs from GenAI and LLM-based attacks

Generative AI (GenAI) and Large Language Models (LLMs) are transforming the enterprise landscape, enhancing customer and employee experiences with unprecedented efficiency and insight. In the recent McKinsey Global Survey on AI, 65 percent of respondents say their organizations are regularly using GenAI, nearly double the percentage reported in the previous survey just ten months earlier.¹ However, while businesses rush to integrate these technologies, they often overlook a critical vulnerability: the APIs that connect LLMs to enterprise data. These connections are powerful but fraught with risk, as unsecured APIs can expose sensitive data, create unauthorized access points, and amplify vulnerabilities.

Focus on Fundamentals: Securing APIs Overlooked Amid AI Risks

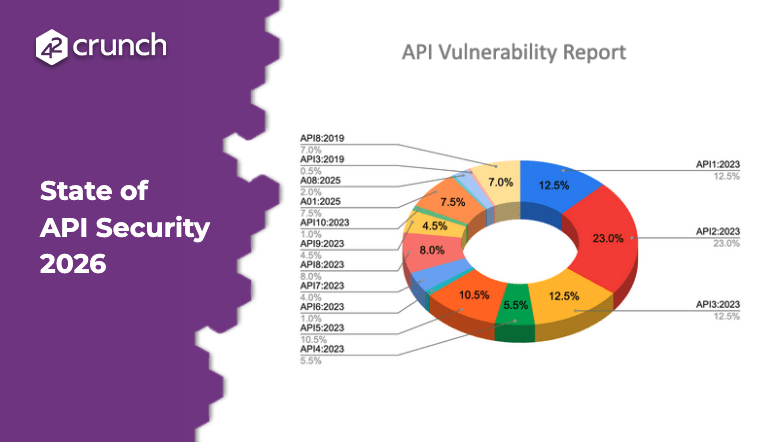

Much of today’s security research is focused on sophisticated AI security topics like preventing model poisoning, bias, and hallucinations. While these concerns are important, they can overshadow a more fundamental issue: securing the APIs that LLMs rely on. APIs are a key component of any LLM deployment, because they are the means by which an LLM communicates with other systems and applications. If these underlying APIs are vulnerable, the risk of a breach increases dramatically. Addressing API security is critical to ensuring a strong foundation; otherwise, even the most secure AI models can be compromised through overlooked, insecure API connections. API security isn’t just about protecting APIs; it’s also about ensuring they function correctly and efficiently. Embedding testing and monitoring in the API lifecycle helps identify issues and inefficiencies early, so that the APIs used by LLM systems are reliable and secure.

Why Traditional Testing Falls Short

Many organizations still depend on generic dynamic application security testing (DAST) tools to protect their APIs, but these tools lack the sophistication to address API-specific vulnerabilities, particularly those exposed through LLM interactions. Such legacy tools often fail to detect complex API attacks: they generate a high rate of false positives while missing critical security gaps.

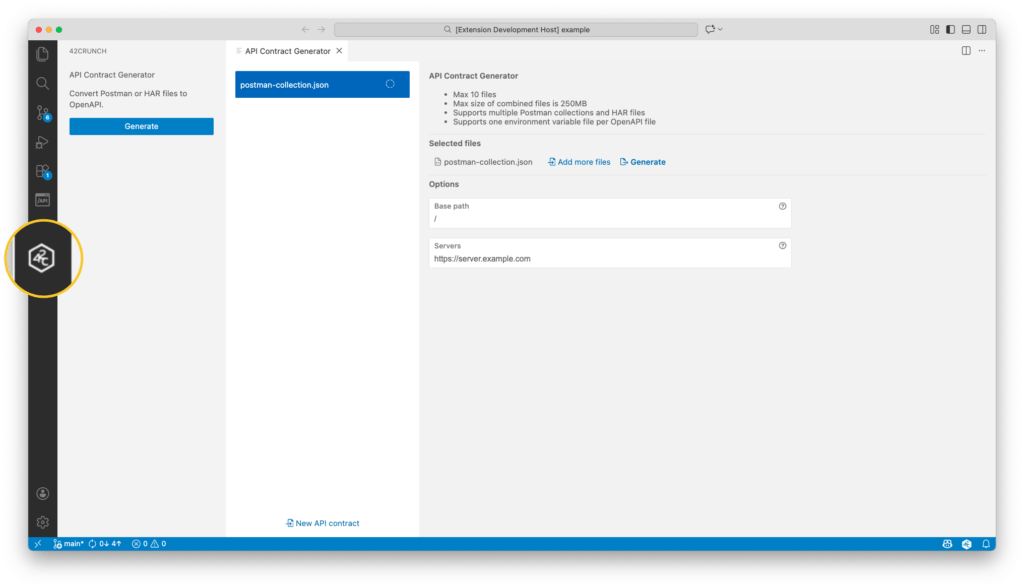

Leveraging OpenAPI for Effective Security

Because APIs are structured and documented through standards like the OpenAPI Specification (OAS), there’s an opportunity to elevate security practices. The OAS is not just critical for security testers; it is also the authoritative source that LLMs use to understand how the API functions. This makes it imperative that the OAS itself is verified to be secure, accurate, and complete. By ensuring that the OAS accurately reflects the API’s functionality without exposing unnecessary details, security teams can craft precise, automated tests that protect against vulnerabilities unique to APIs connected with LLMs.
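To make the idea of verifying the OAS concrete, here is a minimal sketch of an automated check over a parsed OpenAPI 3.x document. It is an illustration under stated assumptions, not a description of any particular product: the function name `audit_openapi`, the two rules it applies, and the Orders API definition are all hypothetical. The sketch flags operations that declare no security requirement and string parameters that carry no constraint.

```python
# Minimal sketch: audit a parsed OpenAPI 3.x document (a plain dict).
# The function name, the rules, and the example API are hypothetical.

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}
STRING_CONSTRAINTS = {"maxLength", "pattern", "enum", "format"}


def audit_openapi(spec: dict) -> list:
    """Return human-readable findings for the given OpenAPI document."""
    findings = []
    # A top-level 'security' entry is the default for every operation.
    global_security = spec.get("security")

    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as 'parameters'

            # An operation-level 'security' overrides the global default;
            # an empty list explicitly disables authentication.
            security = operation.get("security", global_security)
            if not security:
                findings.append(f"{method.upper()} {path}: no security requirement")

            # Only inline operation-level parameters are checked here;
            # '$ref' entries and path-level parameters are not resolved.
            for param in operation.get("parameters", []):
                schema = param.get("schema", {})
                if schema.get("type") == "string" and not (STRING_CONSTRAINTS & schema.keys()):
                    findings.append(
                        f"{method.upper()} {path}: string parameter "
                        f"'{param.get('name')}' has no maxLength, pattern, enum, or format"
                    )
    return findings


# Hypothetical API definition used only to exercise the checks.
example_spec = {
    "openapi": "3.0.3",
    "info": {"title": "Orders API (hypothetical)", "version": "1.0.0"},
    "components": {
        "securitySchemes": {"bearerAuth": {"type": "http", "scheme": "bearer"}}
    },
    "paths": {
        "/orders/{orderId}": {
            "get": {
                "security": [{"bearerAuth": []}],
                "parameters": [{
                    "name": "orderId", "in": "path", "required": True,
                    "schema": {"type": "string", "pattern": "^[0-9a-f]{24}$"},
                }],
                "responses": {"200": {"description": "OK"}},
            },
            "delete": {
                "parameters": [{
                    "name": "orderId", "in": "path", "required": True,
                    "schema": {"type": "string"},
                }],
                "responses": {"204": {"description": "Deleted"}},
            },
        }
    },
}

if __name__ == "__main__":
    for finding in audit_openapi(example_spec):
        print(finding)
```

In this example the GET operation passes both checks, while the DELETE operation is reported twice: once for the missing security requirement and once for the unconstrained `orderId` parameter. A real audit would cover far more than these two rules, for example request and response schemas, and would resolve `$ref` references before checking.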

A Call to Action

As enterprises increasingly integrate LLMs, securing the APIs that connect them to critical data sources is non-negotiable. A robust approach to API security that includes continuous testing and hardening, guided by standards like OAS, is essential. At 42Crunch, we are committed to helping organizations secure their APIs, ensuring that they are prepared to meet the demands of today’s AI-driven world without compromising security.

¹ https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai
